Neurocomputational Study Reveals Brain's Organization of Conversational Content

In a study published in *Nature Human Behaviour*, researchers from Osaka University and the National Institute of Information and Communications Technology (NICT) report new findings on how the human brain organizes and processes conversational content. The research, which combined functional magnetic resonance imaging (fMRI) with large language models (LLMs) such as GPT, aims to improve our understanding of the neural mechanisms underpinning real-time dialogue and communication.

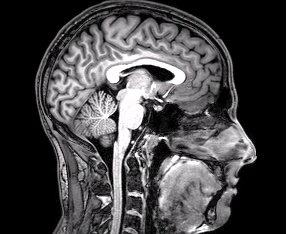

The study involved eight participants who were monitored during spontaneous conversations about designated topics. Their brain activity was tracked using fMRI, a neuroimaging technique that measures blood flow changes attributable to neural activity. According to Shinji Nishimoto, the senior author of the paper and a researcher at Osaka University, the long-term goal of this research is to elucidate how the human brain supports everyday interactions, particularly through language-based conversations—one of the most fundamental expressions of human intellect and social interaction.

"Recent advances in large language models such as GPT have provided the quantitative tools needed to model the rich, moment-by-moment flow of linguistic information, making this study possible," Nishimoto stated in an interview with *Medical Xpress* on July 3, 2025.

During the study, researchers transformed the participants' conversational utterances into numerical vectors using the GPT model, allowing them to analyze the linguistic hierarchy across varying timescales, from individual words to entire discourse. Masahiro Yamashita, the first author of the paper, explained that this approach made it possible to predict brain responses during both speech production and comprehension.
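The general idea of predicting brain responses from language-model vectors can be illustrated with a small sketch. This is not the authors' pipeline: the data are synthetic, and the window lengths, the ridge penalty, and the helper names `timescale_features`, `fit_ridge`, and `voxelwise_correlation` are all assumptions made for illustration. Random vectors stand in for GPT embeddings, trailing averages over different window lengths stand in for "timescales", and a ridge regression maps the features to simulated voxel responses.

```python
import numpy as np


def timescale_features(emb, windows=(1, 4, 16)):
    """Concatenate trailing-mean embeddings over several window lengths.

    A window of 1 keeps the current vector; longer windows average over
    more preceding context (a crude stand-in for longer timescales).
    """
    n, d = emb.shape
    csum = np.vstack([np.zeros((1, d)), np.cumsum(emb, axis=0)])
    idx = np.arange(1, n + 1)
    feats = []
    for w in windows:
        start = np.maximum(idx - w, 0)
        feats.append((csum[idx] - csum[start]) / (idx - start)[:, None])
    return np.hstack(feats)


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: one weight column per voxel."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ Y)


def voxelwise_correlation(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom


# Synthetic data only: random vectors stand in for LLM embeddings,
# and the "voxel" responses are simulated from a known linear map plus noise.
rng = np.random.default_rng(0)
n_samples, emb_dim, n_voxels = 400, 8, 5
embeddings = rng.standard_normal((n_samples, emb_dim))
X = timescale_features(embeddings)
true_W = rng.standard_normal((X.shape[1], n_voxels))
Y = X @ true_W + 0.5 * rng.standard_normal((n_samples, n_voxels))

# Fit on the first 300 samples, evaluate on the held-out 100.
train, test = slice(0, 300), slice(300, 400)
W = fit_ridge(X[train], Y[train], alpha=1.0)
scores = voxelwise_correlation(Y[test], X[test] @ W)
print(scores.round(2))
```

In a setup like this, one plausible way to compare speaking and listening would be to fit separate models on production and comprehension segments and compare the held-out prediction scores, though the article does not describe the study's method at that level of detail.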

The findings reveal that the brain employs distinct neural strategies to integrate linguistic information depending on whether an individual is speaking or listening. "An increasing body of research suggests that the meanings of spoken and perceived language are represented in overlapping brain regions," Yamashita explained. "However, in real conversations, the challenge is distinguishing between what I say and what you say. Our study highlights how the brain organizes this distinction."

This research adds to a growing body of evidence on the complexity of language processing in the brain. By showing that the brain organizes conversational content differently for production and comprehension, the study opens avenues for further work on the neural dynamics of human interaction. Future studies by Nishimoto and his colleagues aim to investigate how the brain selects what to say from among many potential responses, shedding further light on the rapid and efficient decision-making involved in natural conversation.

In conclusion, this neurocomputational study adds a piece to the puzzle of linguistic processing and has implications for the development of brain-inspired computational models. As neuroscience continues to intersect with technology, the insights from this research could inform advances in artificial intelligence, particularly conversational agents designed to engage in human-like dialogue.
